Using Cgroups v2 to limit system I/O resources

Introduction to Cgroups

Cgroups (control groups) are a Linux kernel feature that limits the system resources available to a specified group of processes. They are commonly used in container technologies such as Docker, Kubernetes, and iSulad.

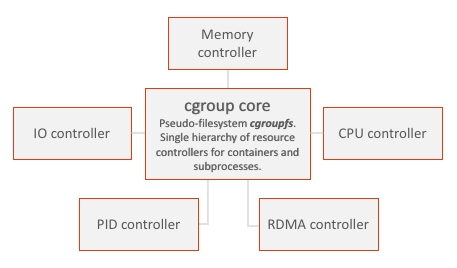

The cgroup architecture consists of two main components:

- the cgroup core, which contains the pseudo-filesystem cgroupfs,

- the subsystem controllers, which enforce thresholds for system resources such as memory, CPU, I/O, PIDs, and RDMA.

The hierarchy of Cgroups

In Cgroups v1, each resource (memory, cpu, blkio) has its own hierarchy, and each resource hierarchy contains the cgroups for that resource. The cgroup directory is located at /sys/fs/cgroup/.

Users can create a sub-cgroup in each resource directory to limit how much of that resource a group of processes may use.

Each resource directory under /sys/fs/cgroup/ corresponds to a system resource that can be controlled.

Unlike cgroups v1, v2 has only a single hierarchy.

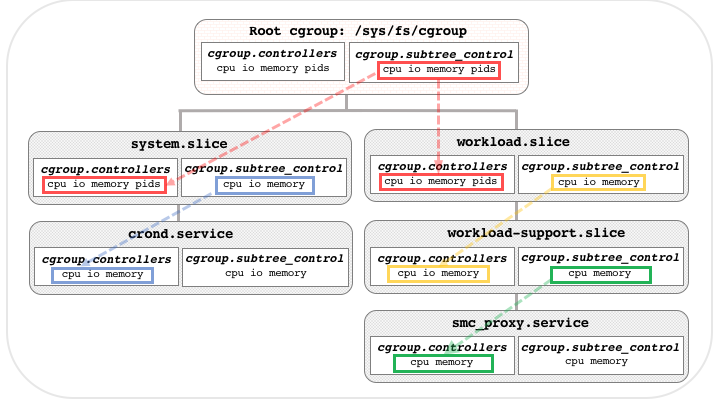

/sys/fs/cgroup is the root cgroup. The figure below shows the relationship between a parent cgroup and a child cgroup.

The cgroup.controllers file lists the controllers available in this cgroup; the root cgroup has all the controllers on the system.

The cgroup.subtree_control file lists the controllers that are enabled for the child cgroups, that is, the resources that can be limited in them. Those limits are inherited by the sub-cgroups.

A child cgroup's cgroup.controllers is generated from its parent cgroup's cgroup.subtree_control file.

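For example, if a cgroup's cgroup.controllers lists cpuset, cpu, io, memory, rdma, and pids, and its cgroup.subtree_control lists cpuset, cpu, io, memory, and pids, then the cgroup itself can limit cpuset, cpu, I/O, memory, rdma, and pids, while its child cgroups can limit cpuset, cpu, I/O, memory, and pids.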
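
As a minimal sketch of how to inspect these two files, the helper below prints both for a given cgroup directory and defaults to the root cgroup. The name show_controllers is hypothetical, chosen only for illustration; it is not a standard command.

```shell
# Hypothetical helper: print the controllers available to a cgroup and the
# ones it hands down to its children. $1 is the cgroup directory.
show_controllers() {
    cg="${1:-/sys/fs/cgroup}"
    echo "available: $(cat "$cg/cgroup.controllers")"
    echo "children: $(cat "$cg/cgroup.subtree_control")"
}
```

Calling `show_controllers /sys/fs/cgroup` on a cgroups v2 system prints the two controller lists of the root cgroup.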

To modify cgroup.subtree_control, write a controller name prefixed with a plus sign (+) to enable it or with a minus sign (-) to disable it.

As in this example:
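
A minimal sketch, assuming a root shell (the file is only writable by root). The helper name set_subtree_control is hypothetical; it simply writes the given "+name" and "-name" tokens to the file in one line.

```shell
# Hypothetical helper: write "+name" / "-name" tokens to a cgroup's
# cgroup.subtree_control. $1 is the cgroup directory; the rest are tokens.
set_subtree_control() {
    cg="$1"
    shift
    echo "$*" > "$cg/cgroup.subtree_control"
}
```

For instance, `set_subtree_control /sys/fs/cgroup +io +memory` enables the io and memory controllers for the children of the root cgroup, and `set_subtree_control /sys/fs/cgroup -memory` disables memory again.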

Enable cgroups v2 in Linux

First, check the type of the currently mounted cgroup filesystem with the following command:
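
One common way to do this, assuming GNU coreutils stat, is `stat -fc %T /sys/fs/cgroup/`. The sketch below wraps it in two small helpers whose names (cgroup_fs_type, cgroup_version) are hypothetical.

```shell
# Report the filesystem type mounted at the cgroup root (GNU stat assumed).
cgroup_fs_type() {
    stat -fc %T "${1:-/sys/fs/cgroup}"
}

# Map a filesystem type name to a cgroup version.
cgroup_version() {
    case "$1" in
        cgroup2fs) echo "v2" ;;
        tmpfs)     echo "v1" ;;
        *)         echo "unknown" ;;
    esac
}
```

Running `cgroup_version "$(cgroup_fs_type)"` reports which version the system is using.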

For cgroup v2, the output is cgroup2fs.

For cgroup v1, the output is tmpfs.

If the output is tmpfs, edit the GRUB configuration file /etc/default/grub and append systemd.unified_cgroup_hierarchy=1 to the GRUB_CMDLINE_LINUX setting.
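
A sketch of the resulting line, assuming GRUB_CMDLINE_LINUX was previously empty. On a real system, keep the options that are already present and append the new one after a space.

```shell
# /etc/default/grub (excerpt)
# Assumption: no other kernel options were set here before.
GRUB_CMDLINE_LINUX="systemd.unified_cgroup_hierarchy=1"
```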

Then run sudo update-grub. After the system reboots, cgroups v2 will be enabled.

Configure cgroup to limit I/O resource

To create a new cgroup, use mkdir in /sys/fs/cgroup/. Here we create a cgroup named nvme.
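
A minimal sketch of this step, assuming a root shell. The helper name create_cgroup is hypothetical; it creates the directory and lists what it contains.

```shell
# Create a cgroup directory and list the interface files it contains.
# On cgroupfs the kernel populates the new directory automatically.
create_cgroup() {
    mkdir -p "$1" && ls "$1"
}
```

For the cgroup used in this article, the call would be `create_cgroup /sys/fs/cgroup/nvme`.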

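The kernel populates the new nvme directory with the cgroup interface files, such as cgroup.controllers, cgroup.procs, and cgroup.subtree_control.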

To control I/O resources in the new cgroup, we first need to enable the io controller in the root cgroup's /sys/fs/cgroup/cgroup.subtree_control file.
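
A minimal sketch of this step. The helper name enable_io_controller is hypothetical; it writes directly when the file is writable (a root shell) and otherwise pipes through `sudo tee`, so that the write itself runs with root privileges.

```shell
# Enable the io controller for the children of a cgroup (default: root cgroup).
enable_io_controller() {
    ctl="${1:-/sys/fs/cgroup}/cgroup.subtree_control"
    if [ -w "$ctl" ]; then
        # Root shell: a plain redirection works.
        echo "+io" > "$ctl"
    else
        # Unprivileged shell: let tee, run under sudo, open the file.
        echo "+io" | sudo tee "$ctl" > /dev/null
    fi
}
```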

Don't combine sudo with > to write to this file (unless you are already in a root shell). The shell performs the > redirection with your own privileges before it runs the command, so sudo has no effect on the write.

We also need to find out the major and minor numbers of the block device.
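
One way to look these up, sketched here under the assumption that sysfs is mounted at /sys, is to read the device's dev file. The helper name dev_numbers is hypothetical; `lsblk` shows the same information in its MAJ:MIN column.

```shell
# Print MAJOR:MINOR for a block device name such as nvme0n1.
# $2 optionally overrides the sysfs directory (default /sys/class/block).
dev_numbers() {
    cat "${2:-/sys/class/block}/$1/dev"
}
```

`dev_numbers nvme0n1` prints a pair such as 259:0; the actual numbers depend on the system.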

We will use the major and minor numbers of the nvme0n1 device.

The io.max file supports the following control keys:

- riops: max read I/O operations per second

- wiops: max write I/O operations per second

- rbps: max read bytes per second

- wbps: max write bytes per second

Each line of io.max must be keyed by the device number, in the form $MAJOR:$MINOR riops=? wiops=? rbps=? wbps=?

A limit can be removed by setting its key to max, for example riops=max.

Here we use io.max to limit the write bandwidth (wbps, write bytes per second) to 1 MB/s:
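
A minimal sketch, assuming a root shell. The helper name limit_write_bps is hypothetical, the device number 259:0 in the usage note is a placeholder for the numbers found on your own system, and 1 MB/s is taken here to mean 1048576 bytes per second.

```shell
# Set a write-bandwidth limit for one device in a cgroup.
# $1 = cgroup directory, $2 = MAJOR:MINOR, $3 = limit in bytes per second
limit_write_bps() {
    echo "$2 wbps=$3" > "$1/io.max"
}
```

With the cgroup from this article, the call would look like `limit_write_bps /sys/fs/cgroup/nvme 259:0 1048576`.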

After configuring the I/O limit, we need to add the PID of the process to be controlled to the cgroup.

In the following command, $$ expands to the PID of the current shell:
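
A minimal sketch, assuming a root shell. The helper name join_cgroup is hypothetical; it writes the shell's own PID to the cgroup's cgroup.procs file.

```shell
# Move the current shell into a cgroup. $1 is the cgroup directory.
# Processes started from this shell afterwards belong to the same cgroup.
join_cgroup() {
    echo "$$" > "$1/cgroup.procs"
}
```

For the cgroup used here, that is `join_cgroup /sys/fs/cgroup/nvme`.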

Now let's use dd to generate an I/O workload. Before we start testing, clear the page cache first!

Clear the page cache:
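
A minimal sketch using the kernel's drop_caches interface. The helper name drop_page_cache is hypothetical. Writing 1 frees the page cache only; this is an assumption about which value to use, since other values also free dentries and inodes.

```shell
# Flush dirty pages to disk, then ask the kernel to drop the clean page cache.
drop_page_cache() {
    sync    # dirty pages cannot be dropped, so write them back first
    echo 1 | sudo tee /proc/sys/vm/drop_caches > /dev/null
}
```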

Use dd to generate an I/O workload:
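
A minimal sketch, assuming GNU dd and a target file on the limited device. The helper name buffered_write and the file path in the usage note are hypothetical.

```shell
# Write $2 blocks of 1 MiB of zeros to file $1 through the page cache.
buffered_write() {
    dd if=/dev/zero of="$1" bs=1M count="$2"
}
```

Run it from the shell that was added to the cgroup, for example `buffered_write /mnt/nvme/test.img 100`.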

Note that the data is written to the page cache immediately.

Using iostat to monitor the writeback performance, we can see that MB_wrtn/s is limited to 1.0 MB/s. This is a difference between cgroups v1 and v2: cgroups v2 can limit the writeback bandwidth.
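
A minimal sketch of such a monitoring command, assuming iostat from the sysstat package is installed. The helper name watch_writes is hypothetical; -d selects the device report and -m reports throughput in megabytes per second.

```shell
# Print device statistics for $1 every $2 seconds (default: 1) until interrupted.
watch_writes() {
    iostat -dm "$1" "${2:-1}"
}
```

`watch_writes nvme0n1` then shows the MB_wrtn/s column for the device once per second.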

Furthermore, when the direct oflag is added, which makes dd write data directly to the device and bypass the page cache, the write bandwidth is also limited to 1.0 MB/s.
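
A minimal sketch of the direct-I/O variant, assuming GNU dd and a filesystem that supports O_DIRECT. The helper name direct_write is hypothetical.

```shell
# Write $2 blocks of 1 MiB of zeros to file $1, bypassing the page cache.
direct_write() {
    dd if=/dev/zero of="$1" bs=1M count="$2" oflag=direct
}
```

With the limit in place, the throughput that dd reports at the end of the run should stay close to the configured 1 MB/s.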

Summary

This article introduced Cgroups in Linux and showed how to use Cgroups v2 to limit system I/O resources.

In a subsequent article, I will introduce some system APIs related to Cgroups.
